Test Case Refinement & Intelligent Gap Detection

As part of AI-powered test generation, DevAssure analyzes your design artifacts (e.g., Figma files, mockups) alongside your functional specifications (e.g., feature requirements, Jira tickets). Any discrepancies it detects between them are flagged as gaps, so you can address inconsistencies early and improve test coverage.

Gaps

DevAssure identifies potential mismatches or omissions between your designs and documentation. These may include:

- Differences between design elements and requirement text

- Missing validations or user flows

- UI components without defined behavior

- Requirements not reflected in design files

- Jira tickets lacking corresponding design inputs

Resolving these gaps early helps reduce development rework and leads to a more consistent, testable implementation.

You can review, clarify, and resolve these gaps to generate more refined and accurate test cases.
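The following is a minimal conceptual sketch that shows what a gap is. It is not DevAssure's implementation, because the product's AI-driven analysis is not documented here. `Artifacts` and `find_gaps` are hypothetical names, and exact string matching stands in for the semantic comparison a real tool would need. The sketch checks three of the gap types listed above: requirements not reflected in the design, design elements with no matching requirement, and UI components without defined behavior.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Artifacts:
    """Hypothetical, simplified view of the inputs to gap detection."""

    # Behaviors the written requirements call for (e.g., from a Jira ticket).
    required_behaviors: set[str]
    # Behaviors the design visibly covers (e.g., states shown in a Figma frame).
    designed_behaviors: set[str]
    # UI components in the design, mapped to their defined behavior (None = undefined).
    components: dict[str, str | None] = field(default_factory=dict)


def find_gaps(a: Artifacts) -> list[str]:
    """Return human-readable gap descriptions, using exact string matching."""
    gaps: list[str] = []
    # Requirements not reflected in the design.
    for behavior in sorted(a.required_behaviors - a.designed_behaviors):
        gaps.append(f"Requirement not reflected in design: {behavior}")
    # Design content that no requirement describes.
    for behavior in sorted(a.designed_behaviors - a.required_behaviors):
        gaps.append(f"Design element without matching requirement: {behavior}")
    # UI components whose behavior is not defined anywhere.
    for name, behavior in sorted(a.components.items()):
        if behavior is None:
            gaps.append(f"UI component without defined behavior: {name}")
    return gaps


if __name__ == "__main__":
    # Hypothetical data loosely based on the login example in this guide.
    login = Artifacts(
        required_behaviors={
            "login with valid credentials",
            "error on missing fields",
            "error on incorrect credentials",
        },
        designed_behaviors={"login with valid credentials"},
        components={"Login button": "submit credentials", "Forgot password link": None},
    )
    for gap in find_gaps(login):
        print(gap)
```

With the sample data, the sketch reports the two error states that the requirement expects but the design lacks. It also reports the hypothetical "Forgot password link", which has no defined behavior. The comparison runs in both directions because a gap can originate on either side, in the requirements or in the design.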

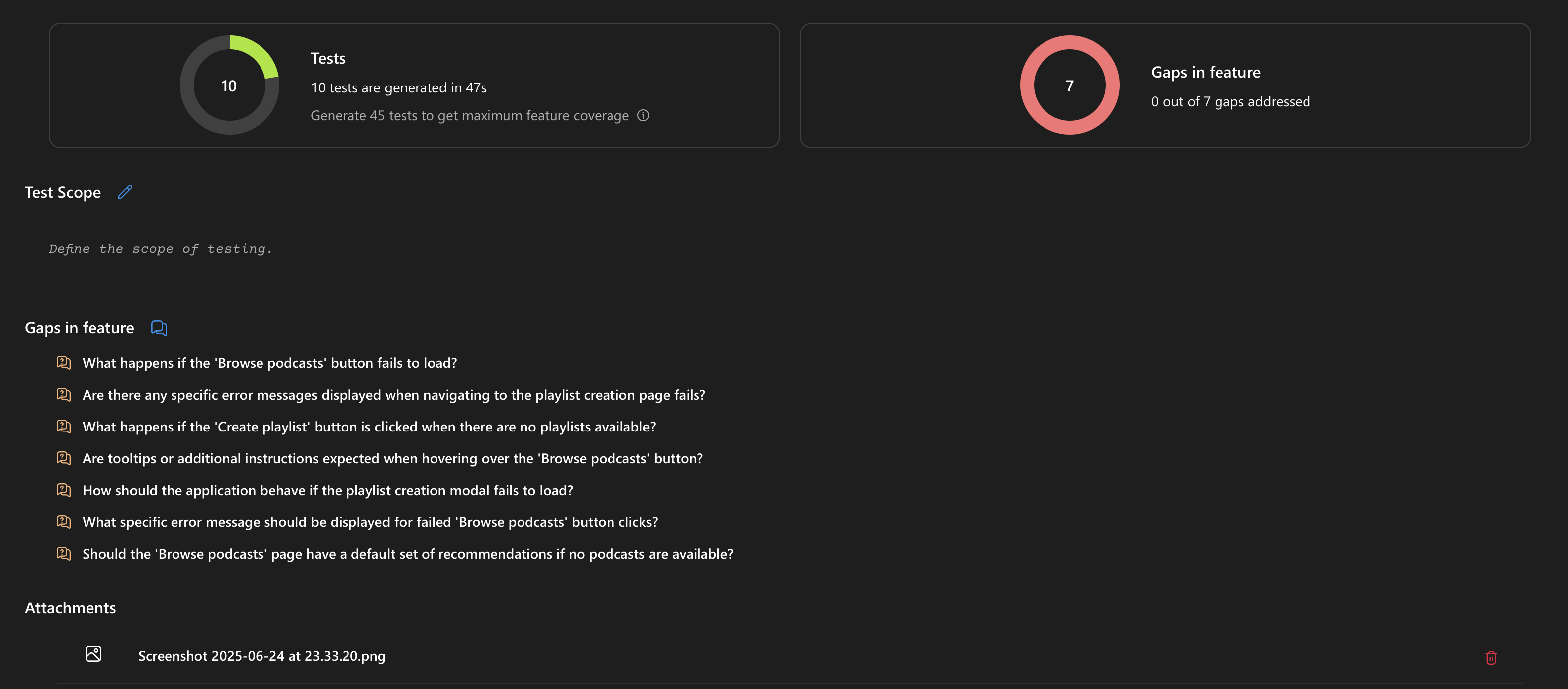

Reviewing Identified Gaps

- Click the Gaps icon in the top-right corner of the Test Generation interface.

- A red badge with a number (e.g., "7") indicates the total number of identified gaps.

- Clicking the icon opens a detailed list of gaps detected by the AI.

Each gap represents a potential inconsistency between the design and specifications that may impact test accuracy or completeness.

Resolving Gaps

DevAssure not only identifies discrepancies (gaps) between your design artifacts and specifications but also helps you resolve them through interactive input.

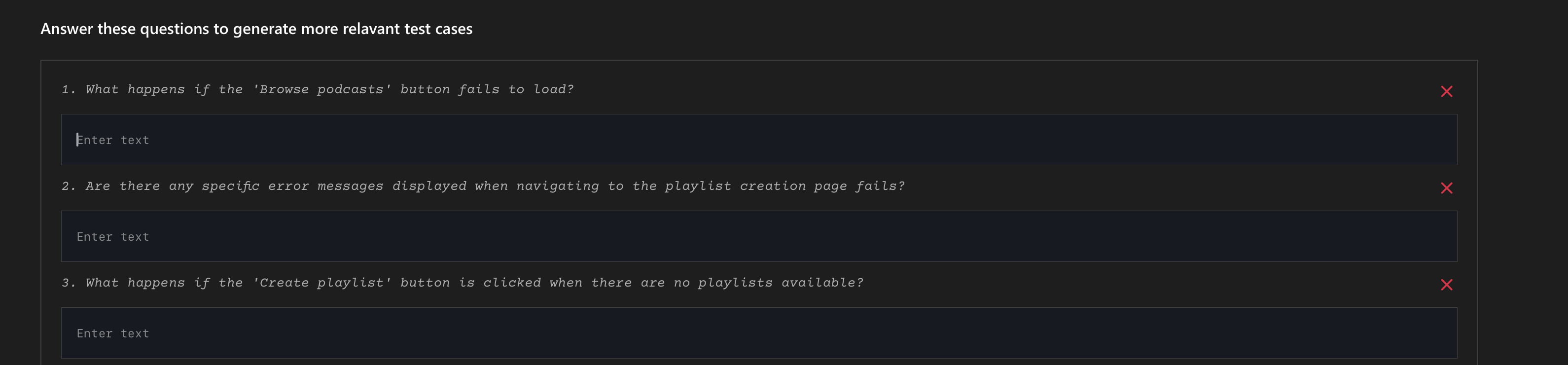

- Accessing Gaps and Questions: Clicking the Gaps indicator opens the list of identified gaps, along with a set of questions that ask you for further clarification.

- Interactive Refinement: Each gap includes a question asking for additional context (e.g., "What should happen when the user clicks this button?").

- Add Your Response: Type your answer in the "Add Response" text field below each prompt, then click "Send" to submit it.

- Skip if Not Relevant: If a prompt is not applicable, you can skip it.

- Dynamic Refinement: Based on your responses, DevAssure re-analyzes the input and updates or enhances the generated test cases accordingly.

This helps the test suite reflect both the design intent and the functional expectations more completely.

Review the generated test cases, then click "Save Tests" to store them in your project. Saved test cases are listed under the "Test Cases" section, and clicking a test case opens it in the editor for further review and editing.

🛠️ Troubleshooting

| Issue | Solution |
|---|---|
| Figma frames not detected | Ensure Figma access is authorized and frames are selected |
| Jira ticket not fetching | Verify the Jira connection and ticket permissions |
| No test cases generated | Ensure at least one input method is filled |
| Gaps list is empty | There may be no inconsistencies, or inputs may be too minimal |

Sample Use Case

Let’s say you want to test a Login Flow:

- You select the Login Screen in Figma.

- You enter the Jira ticket ID: AUTH-101.

- You paste the following requirement:

"Users should be able to log in using username and password. Validation errors should be shown for missing fields or incorrect credentials."

- You define the scope: "Cover both success and failure login attempts."

- You attach a mockup of the actual login screen.

DevAssure will generate:

- Happy path test: Successful login

- Negative paths: Invalid credentials, empty fields

- UI validation: Button disabled states, field error highlights

- Gaps: For example, a gap is flagged if the design doesn’t show the error messages that the requirement expects