How to Deal with Flaky Tests in Automation Pipelines

Why do your tests pass locally but fail on CI with no real code changes?

Welcome to the world of flaky tests - those annoying, inconsistent tests that fail when you least expect it. Flaky tests have a way of showing up when you're least prepared for them. They pass until they don’t. Then pass again on the next rerun.

Google once shared that over 16% of test failures come from flakiness not actual bugs.

It’s the kind of issue that eats into your sprint time, triggers false alerts, and makes developers lose trust in automation.

In this blog, we’ll explore what flaky tests really are, why they happen, and how to spot and fix them before they mess with your release velocity.

What Are Flaky Tests and Why They’re a Problem

A flaky test is one that acts up inconsistently. It might fail on CI but work fine locally. Or it may only break in certain environments or order of execution.

That kind of unpredictability creates doubt. You start ignoring red pipelines or rerunning tests until they pass just to move on. Over time, this creates drag on your dev cycle. It pulls attention from real issues and adds noise that slows down feedback loops.

For high-performing teams, it’s not just a technical problem- it’s a trust issue.

Common Causes of Flaky Tests

Not every test failure points to a real bug. Sometimes, the test itself is unreliable. Here’s a quick overview of what usually causes test flakiness:

- Async Timing Issues: UI delays, missing waits, or hardcoded timeouts can break tests that rely on async flows especially under CI load.

- Order Dependency: Tests that pass only when run after others often share state or rely on leftover data. Parallel execution usually exposes this fast.

- Shared State or Pollution: When tests modify shared resources like databases or configs, things get messy. Behavior might differ between local and CI runs.

- Unstable External Services: Hitting real APIs, flaky mocks, or dealing with slow networks can lead to false failures that aren’t code-related.

- Random or Uncontrolled Test Data: Random values without proper seeding can create inconsistent results and make bugs hard to reproduce.

How to Detect Flaky Tests (Manual + Automated)

Flaky tests are tricky because they don’t always fail. You can’t spot them just by looking at a single red build. Here are a few ways teams catch them both manually and with tooling.

Rerun Suspicious Failures

The simplest way? Run the test again. If it passes on the second or third try, and nothing has changed, it’s probably flaky. This isn't foolproof, but it's often how the problem first gets noticed. Some teams even have CI setups that auto-rerun failed tests to reduce false alerts.

Use CI Logs and Retry Patterns

CI systems hold clues. If the same test keeps failing in one out of every five runs, it’s a red flag. Look at retry logs, timestamps, and test durations. A flaky test often shows up as intermittent, slow, or failing under specific load conditions.

Watch for Patterns

Flaky tests leave behind signals if you know where to look.

- Does the failure only happen on certain OS or browsers?

- Is it tied to parallel execution?

- Do failures spike during certain hours or after other tests?

The more runs you collect, the clearer the pattern becomes.

Use Tools to Surface Flakiness

Some tools now flag flaky behavior automatically. Jenkins has plugins like Flaky Test Handler that rerun tests and track stability over time.

Platforms like DevAssure do more than flag test failures. They help distinguish flaky tests from real issues by analyzing behavior across your pipelines. You also get visibility into flaky clusters, historical failure trends, and early alerts before things get out of hand.

Want to stay ahead of flakiness? See how DevAssure helps teams reduce noise and regain trust in their test pipelines. Schedule a demo to learn more.

How to Fix Flaky Tests Step by Step

Got a test that fails for no obvious reason? Don’t worry, here’s a step-by-step guide to help you fix flaky tests without losing your mind.

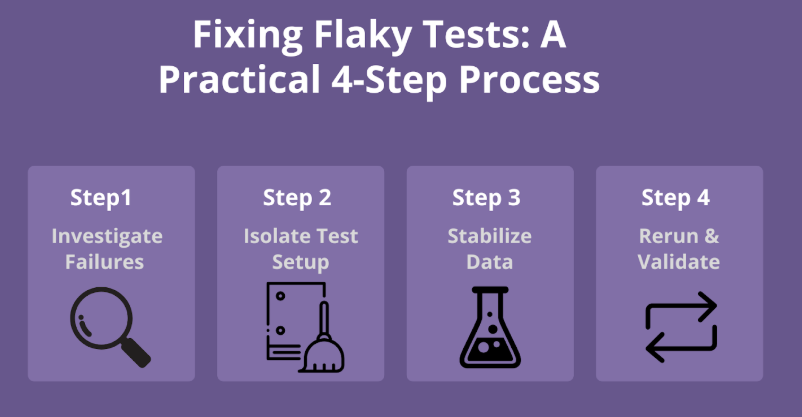

Fixing Flaky Tests – 4 Key Steps Image Alt Text: Step-by-step process to fix flaky tests including analysis, cleanup, data stability, and reruns

Dig into What’s Really Going On

Run the test again and again. Does it always fail in parallel runs? Only on CI? Maybe just when another test runs before it? Flaky behavior usually points to something hiding under the surface—timing issues, leftover data, or an unstable mock. Check the logs. See what changed. You’ll often catch a clue when you slow things down.

Clean Up Test Setup

Does the test rely on hardcoded delays or shared state? Fix that first. Make sure mocks are reliable. Remove any global variables. Reset everything between runs. Tests should behave the same no matter when or how often they run.

Watch Your Data

Random values are useful for coverage, but if they’re not controlled, they can cause trouble. Add a seed if you’re using random data. Use fixtures instead of ad-hoc values where possible. Unpredictable inputs = unpredictable results. Keep it clean and repeatable.

Don’t Just Run It Once

You fixed it? Great. Now run it ten more times. On CI. Locally. In parallel. If it holds up, you’re probably safe. If not, you might’ve only fixed the symptom. Some teams even reintroduce flaky tests slowly just to be sure the fix sticks.

Preventing Flaky Tests in CI/CD Pipelines

Fixing flaky tests is great. Not having them in the first place? Even better. Here are a few ways to keep them from creeping into your pipeline in the first place:

Keep Tests Isolated and Independent

No test should rely on another. If your tests depend on shared state or order of execution, you’re already on shaky ground. Use clean setups and tear things down properly after each run. Think: every test should start fresh, like it’s the only one running.

Watch Your Environments

What runs fine on your machine might break on CI. That’s usually a sign your environments are out of sync. Try to keep local and CI setups as close as possible—same configs, same data, same services. Docker or containerized test runners help reduce the drift.

Use Stable Test Data

Avoid flaky inputs. If you’re generating test data on the fly, make it predictable. Seed your generators. Use fixtures or snapshots when you can. Tests should fail when something’s truly broken not because a random value went sideways.

Avoid Hard Waits (and Other Shortcuts)

That sleep(2) might work today but tomorrow, it could make your test fail. Instead of waiting blindly, wait for the condition to be met: the element to load, the API to respond, the state to change. Tests need to be patient and smart, not lazy.

Set a Flakiness Budget

Some teams track flakiness as a metric just like test coverage or deployment frequency. If a test fails more than, say, 5% of the time without a code change, it’s flagged. This helps catch flakiness before it spreads and slows down the team.

DevAssure’s Approach to Dealing with Flaky Tests

DevAssure is purpose-built to help teams identify, manage, and reduce flaky tests at scale without slowing down development. It goes beyond simple retries or logs by offering intelligent detection, automatic quarantine, contextual debugging, and real-time dashboards.

With DevAssure, engineering teams gain the clarity and control needed to maintain stable pipelines even as test suites grow more complex. Here’s how:

Smart Detection Across Pipelines

Flaky tests don’t always fail consistently. DevAssure analyzes historical patterns to detect tests that behave unpredictably over time. It flags tests that cross a failure threshold so teams can act before it spreads.

Intelligent Auto-Quarantine & Retry

DevAssure can automatically quarantine flaky tests, allowing the pipeline to proceed without false blockers. These tests are still tracked in the background with retry logic and stability metrics.

Context-Rich Diagnostics

Each flagged test includes contextual signals—failure rates, run history, environment scope, and timing patterns. This helps teams quickly understand whether an issue is code-related or just unstable behavior.

Developer-Friendly Dashboards

The dashboard visualizes flaky test clusters, pass/fail ratios, and platform-specific insights giving teams a clear picture of test health across projects.

CI/CD Workflow Integration

DevAssure integrates directly into your CI/CD tools like GitHub Actions, Jenkins, or GitLab. It fits into your existing flow, managing retries, tracking quarantined tests, and reporting cleanly without disrupting deployments.

Curious how DevAssure fits into your pipeline? Start exploring or schedule a quick demo to see it in action.

🚀 See how DevAssure accelerates test automation, improves coverage, and reduces QA effort.

Ready to transform your testing process?

Detect Early, Fix Fast, Prevent Smarter

Flaky tests are sneaky. They don’t break things loudly but they quietly slow teams down, chip away at confidence, and bury real bugs under layers of noise.

The best way to handle them? Catch them early. Fix them fast. And build smarter systems to keep them from coming back. That means writing better tests, using stable environments, and watching for signs of flakiness as part of your regular workflow not just when the pipeline turns red.

More importantly, it calls for a mindset shift: test reliability is product reliability. If your test suite can’t be trusted, neither can your release.

DevAssure is built to help with that. It gives teams the visibility, automation, and orchestration they need to manage flakiness at scale without disrupting the flow of shipping.

🚀 See how DevAssure accelerates test automation, improves coverage, and reduces QA effort.

Ready to transform your testing process?