Autonomous QA in 2026 - How Agentic AI Is Redefining Software Testing

You know that sinking feeling when a routine release turns into a bug hunt marathon. One minute you’re merging code, the next you’re untangling a cascade of flaky test failures that never should have made it out of staging.

Even teams with solid automation still wrestle with brittle suites, maintenance overload, and regressions that slip into production like ninjas in the night. Traditional automation was meant to be our safety net, but lately it feels more like a treadmill that keeps speeding up.

A study revealed that nearly half of IT teams have automated only around 50% of their test processes, even after years of investing in tools and frameworks.

So where’s the gap? Why are legacy testing practices buckling under today’s release velocity, and what does autonomous QA powered by agentic AI do differently as we head toward 2026?

What Is Autonomous QA?

At a basic level, autonomous QA is about testing systems that don’t wait around to be told what to do.

Traditional automation runs exactly what you script. If something changes and the script breaks, it fails. End of story. Autonomous QA works differently. It looks at the application, figures out what’s changed, and decides what needs to be tested next.

Autonomous QA uses AI-driven agents that can create, run, maintain, and refine tests with very little hand-holding.

These systems watch how the product behaves, notice patterns in failures, and adjust their testing strategy as the codebase evolves. Over time, they get better at knowing where things tend to break and where they don’t. That ability to adapt on their own is the real shift.

So how is this different from test automation?

Test automation is great at execution. You give it instructions, it follows them. No guessing. No judgment. That also happens to be its biggest limitation.

Autonomous QA adds a thinking layer on top of execution. Instead of blindly running everything, it can:

- Zoom in on high-risk areas after a code change

- Create new test scenarios instead of recycling outdated ones

- Fix itself when a selector or flow changes

- Reorder test runs based on impact, not just coverage numbers

Put simply, automation runs what already exists. Autonomous QA helps decide what should exist at all.

Where agentic AI comes into play

This shift is driven by agentic AI, which is a fancy way of saying AI systems that can act with intent, context, and feedback.

In a QA setup, that means agents that can:

- Understand changes across UI, APIs, and workflows

- Tell the difference between expected behavior and something that feels off

- Take action without waiting for someone to rewrite a test

- Learn from past failures and avoid repeating the same mistakes

They don’t replace your team. But they do take over a lot of the repetitive thinking that drains time and focus.

Why Teams Are Paying Attention: Benefits of Autonomous QA

Before getting into how autonomous QA works under the hood, it helps to understand why teams are even looking in this direction. Here are some of the benefits:

Less test maintenance, fewer false alarms: Autonomous QA systems can self-heal tests when UI flows or locators change. That means fewer flaky failures and far less time spent babysitting test suites after every minor update.

Smarter coverage without bloated test suites: Autonomous QA focuses on high-risk paths based on code changes, usage patterns, and past defects. Coverage improves, but the suite doesn’t grow uncontrollably.

Faster, more meaningful feedback in CI/CD: Autonomous QA prioritizes what to test first, so pipelines return signal quickly instead of drowning teams in noise. That’s critical when releases are frequent and rollback windows are small.

QA effort shifts from maintenance to thinking: When repetitive upkeep is handled by AI agents, testers can focus on exploratory testing, edge cases, and business logic, the work that actually benefits from human judgment.

Better alignment between dev, QA, and release velocity: Autonomous QA fits naturally into modern delivery workflows. It adapts as the product evolves instead of forcing teams to constantly adapt their tests. That alignment is what makes it viable at scale, not just in demos.

How Agentic AI Works in QA

At a high level, agentic QA follows a clear sequence – one that starts with understanding, not execution. Here’s what that process actually looks like in practice.

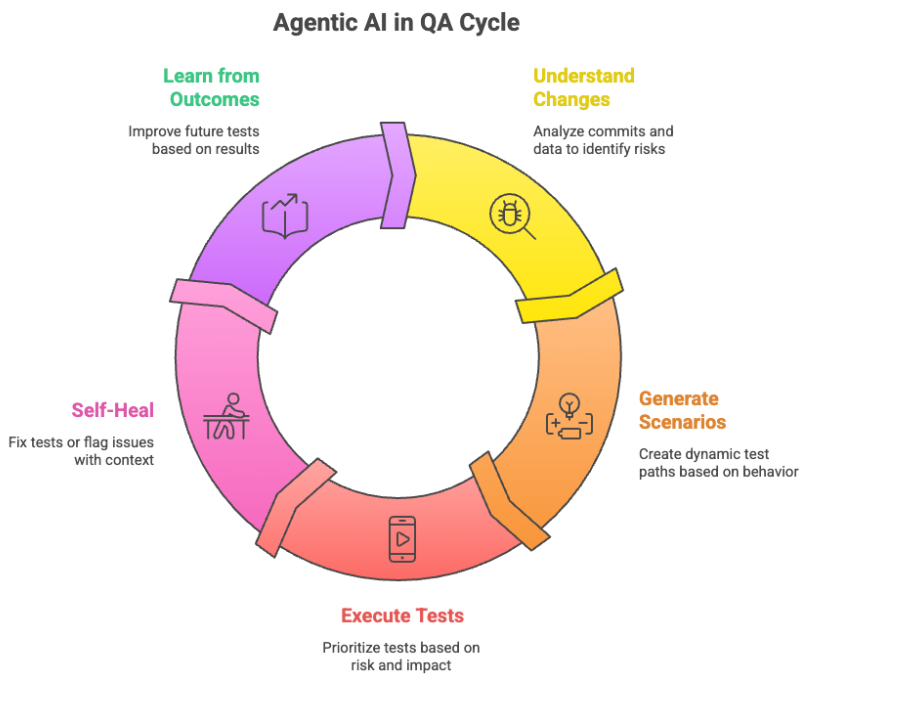

Agentic AI QA lifecycle

Image Alt Text: Agentic AI QA cycle showing understand changes, generate tests, execute, self-heal, and learn

Step 1: Understanding what actually changed

Every release introduces change, but not all changes carry the same risk. Agentic AI looks at commits, builds, UI diffs, API schema updates, and historical failure data to answer a simple question: what’s most likely to break this time? This alone cuts down a lot of wasted test runs.
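To make the idea of change risk concrete, here is a minimal sketch in Python. It assumes a made-up scoring rule: diff size and past failures are each capped and blended with illustrative weights. The `ChangedFile` type, the weights, and the sample data are all hypothetical, and a real agent would likely draw on many more signals.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChangedFile:
    path: str
    lines_changed: int   # size of the diff for this file
    past_failures: int   # historical test failures linked to this file (assumed data source)


def risk_score(change: ChangedFile, churn_weight: float = 0.4,
               history_weight: float = 0.6) -> float:
    """Blend diff size and failure history into a 0..1 score (weights are illustrative)."""
    churn = min(change.lines_changed / 200, 1.0)    # saturate at 200 changed lines
    history = min(change.past_failures / 10, 1.0)   # saturate at 10 past failures
    return round(churn_weight * churn + history_weight * history, 3)


def rank_by_risk(changes: list[ChangedFile]) -> list[tuple[str, float]]:
    """Return (path, score) pairs, riskiest first."""
    scored = [(c.path, risk_score(c)) for c in changes]
    return sorted(scored, key=lambda item: item[1], reverse=True)


# Made-up sample data for one release
changes = [
    ChangedFile("checkout/payment.py", lines_changed=120, past_failures=8),
    ChangedFile("docs/README.md", lines_changed=300, past_failures=0),
    ChangedFile("cart/api.py", lines_changed=20, past_failures=3),
]
ranking = rank_by_risk(changes)
```

In this sample, the payment module ranks first even though the README has the larger diff, because failure history carries more weight than churn.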

Step 2: Generating test scenarios, not just test cases

Instead of replaying the same scripts, the agent creates scenarios based on behavior. If a new flow is introduced or a dependency shifts, it can generate fresh test paths on the fly. No waiting for someone to sit down and script everything manually.
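One simple way to picture scenario generation is as path enumeration over a model of the application's flows. The sketch below is not how any particular agent does it (many use LLMs or recorded usage). It assumes the flows are available as a small graph, and the step names are invented for illustration.

```python
def generate_scenarios(flows: dict[str, list[str]], start: str, goal: str) -> list[list[str]]:
    """Enumerate every loop-free path from start to goal with a depth-first walk."""
    scenarios: list[list[str]] = []

    def walk(node: str, path: list[str]) -> None:
        if node == goal:
            scenarios.append(path)
            return
        for nxt in flows.get(node, []):
            if nxt not in path:          # skip steps already visited to avoid loops
                walk(nxt, path + [nxt])

    walk(start, [start])
    return scenarios


# Hypothetical checkout flow before a release
flows = {
    "cart": ["login", "guest_checkout"],
    "login": ["payment"],
    "guest_checkout": ["payment"],
    "payment": ["confirmation"],
}
before = generate_scenarios(flows, "cart", "confirmation")

# The release adds a coupon step between cart and payment
flows["cart"].append("apply_coupon")
flows["apply_coupon"] = ["payment"]
after = generate_scenarios(flows, "cart", "confirmation")

# Only the paths that did not exist before need fresh tests
new_scenarios = [s for s in after if s not in before]
```

Comparing the scenario sets before and after the change isolates the one new path through `apply_coupon`, which is the path that needs a new test.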

Step 3: Executing tests with intent

Execution isn’t random. Agentic systems prioritize tests based on impact, risk, and past defect patterns. High-risk paths go first. Low-value checks don’t block the pipeline. This is especially useful in CI/CD setups where time is tight and signal matters more than raw coverage.
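As a rough illustration of this ordering, the sketch below sorts tests by risk and splits them into blocking and non-blocking groups. The `SuiteEntry` type, the 0.5 threshold, and the sample tests are assumptions for the example. In practice, the risk values would come from change impact and defect history.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuiteEntry:
    name: str
    risk: float        # 0..1, assumed to come from change impact and defect history
    duration_s: float  # typical runtime in seconds


def plan_run(tests: list[SuiteEntry],
             blocking_threshold: float = 0.5) -> tuple[list[str], list[str]]:
    """Order tests by risk (ties: faster first) and split into blocking / non-blocking."""
    ordered = sorted(tests, key=lambda t: (-t.risk, t.duration_s))
    blocking = [t.name for t in ordered if t.risk >= blocking_threshold]
    non_blocking = [t.name for t in ordered if t.risk < blocking_threshold]
    return blocking, non_blocking


# Made-up suite for illustration
suite = [
    SuiteEntry("checkout_e2e", risk=0.9, duration_s=120),
    SuiteEntry("footer_links", risk=0.1, duration_s=15),
    SuiteEntry("login_api", risk=0.9, duration_s=5),
    SuiteEntry("profile_avatar", risk=0.3, duration_s=40),
]
blocking, non_blocking = plan_run(suite)
```

The blocking group gates the pipeline and runs first. The non-blocking group can run afterwards or in parallel without holding up a merge.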

Step 4: Self-healing when things inevitably break

Selectors change. APIs evolve. Flows get refactored. Instead of failing hard, agentic AI attempts to understand why something broke and fixes the test where possible. When it can’t, it flags the issue with context instead of dumping logs and stack traces.
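Here is a minimal sketch of one common self-healing idea: when the original locator no longer matches, fall back to the element that best matches attributes recorded on the last passing run. The page is modeled as a list of plain dictionaries rather than a real browser DOM, and the function names, the fingerprint format, and the 0.6 threshold are all assumptions.

```python
def similarity(fingerprint: dict[str, str], candidate: dict[str, str]) -> float:
    """Fraction of recorded attributes that the candidate element still matches."""
    if not fingerprint:
        return 0.0
    matches = sum(1 for key, value in fingerprint.items() if candidate.get(key) == value)
    return matches / len(fingerprint)


def find_element(page: list[dict[str, str]], locator_id: str,
                 fingerprint: dict[str, str], min_similarity: float = 0.6):
    """Return (element, healed_id); healed_id is None when the original locator worked."""
    for element in page:
        if element.get("id") == locator_id:
            return element, None
    # Locator broke: pick the closest match on the recorded attributes
    best = max(page, key=lambda el: similarity(fingerprint, el), default=None)
    if best is not None and similarity(fingerprint, best) >= min_similarity:
        return best, best.get("id")
    # Nothing close enough: surface the problem with context instead of guessing
    raise LookupError(f"no element close enough to {locator_id!r}; needs human review")


# Attributes recorded when the test last passed (hypothetical)
fingerprint = {"tag": "button", "text": "Pay now", "class": "btn primary"}

# The page after a refactor: the id and class of the pay button changed
page = [
    {"id": "pay-btn-v2", "tag": "button", "text": "Pay now", "class": "btn primary large"},
    {"id": "cancel", "tag": "button", "text": "Cancel", "class": "btn"},
]
element, healed_id = find_element(page, "pay-btn", fingerprint)
```

The threshold is the important design choice. Set it too low and the test may "heal" onto the wrong element and pass for the wrong reason, so a healed locator is usually worth logging for review.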

Step 5: Learning from outcomes

This part is easy to overlook, but it’s critical. Agentic AI learns from test results, production issues, and missed defects. Over time, it gets better at spotting risky areas and ignoring noise. The more it runs, the sharper it gets.
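A very simple stand-in for this kind of learning is an exponential moving average of failure outcomes per product area. The sketch below assumes that model. The area names, the 0.5 starting estimate, and the learning rate are illustrative, and real systems may use far richer models.

```python
def update_risk(prior: float, failed: bool, learning_rate: float = 0.2) -> float:
    """Exponential moving average: recent outcomes move the estimate, old ones fade."""
    observed = 1.0 if failed else 0.0
    return prior + learning_rate * (observed - prior)


def learn(risk_by_area: dict[str, float], outcomes: list[tuple[str, bool]],
          learning_rate: float = 0.2) -> dict[str, float]:
    """Apply each (area, failed) outcome in order and return the updated estimates."""
    updated = dict(risk_by_area)
    for area, failed in outcomes:
        prior = updated.get(area, 0.5)   # unseen areas start at a neutral 0.5
        updated[area] = update_risk(prior, failed, learning_rate)
    return updated


# Made-up outcomes: these could be test failures or production incidents
risk = {"checkout": 0.5, "search": 0.5}
outcomes = [("checkout", True), ("checkout", True), ("search", False), ("search", False)]
risk = learn(risk, outcomes)
```

After two failures, the checkout estimate rises. After two clean runs, the search estimate falls. Those updated estimates are the kind of signal that can feed back into the next round of prioritization.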


How Autonomous QA Changes Roles in a QA Team

Autonomous QA doesn’t replace testers, but it does change where their time goes. Execution and script maintenance fade into the background, while thinking, judgment, and strategy move to the front.

- Less manual execution and repetitive scripting

- More focus on test strategy, edge cases, and business logic

- Earlier involvement in product and architecture decisions

- Cleaner, context-aware feedback for developers

- New emphasis on risk modeling, AI oversight, and guardrails

What Tools and Approaches Are Leading This Shift?

Autonomous QA isn’t driven by one type of tool. It’s emerging from a combination of capabilities working together. Here’s a clear way to think about what’s shaping this shift:

| Category | What It Does | Why It Matters |
|---|---|---|
| AI Agent Platforms | Coordinate autonomous actions across test creation, execution, and learning | Enable end-to-end autonomy instead of isolated automation |
| Self-Healing Test Frameworks | Automatically fix broken locators and flows | Reduce flaky failures and maintenance overhead |
| LLM-Driven Test Generation | Create test scenarios from behavior, logs, or specs | Replace manual scripting with adaptive coverage |
| Risk-Based Test Prioritization | Rank tests based on change impact and defect history | Faster feedback with higher signal |
| Observability & Feedback Loops | Learn from test outcomes and production issues | Improve test relevance over time |
| Governance & Guardrails | Control what AI can change or execute | Keep autonomy safe and predictable |

Challenges and Limitations of Autonomous QA

Autonomous QA isn’t magic. It’s powerful, but only when used with intent.

- Data quality still matters. AI agents rely on code diffs, logs, and historical failures. Poor inputs lead to poor decisions.

- Business context can be missed. Agents spot patterns well, but they don’t fully understand domain nuance or regulatory edge cases.

- Over-trust is a real risk. Green pipelines feel good, even when coverage is shallow. Autonomy needs visibility and guardrails.
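Guardrails can be as simple as an explicit policy that every agent action is checked against. The sketch below assumes a hypothetical policy format: each action kind lists the paths it may touch and whether a human must review it. The action names and paths are invented for the example.

```python
from dataclasses import dataclass
from fnmatch import fnmatch


@dataclass(frozen=True)
class AgentAction:
    kind: str    # e.g. "update_locator", "generate_test", "delete_test"
    target: str  # file the agent wants to touch


# Hypothetical policy: anything not listed here is denied by default
POLICY = {
    "update_locator": {"paths": ["tests/ui/*"], "needs_review": False},
    "generate_test": {"paths": ["tests/generated/*"], "needs_review": True},
}


def evaluate(action: AgentAction, policy: dict = POLICY) -> str:
    """Return "allow", "review", or "deny" for a proposed agent action."""
    rule = policy.get(action.kind)
    if rule is None:
        return "deny"                                   # unknown action kinds are refused
    if not any(fnmatch(action.target, pattern) for pattern in rule["paths"]):
        return "deny"                                   # outside the allowed paths
    return "review" if rule["needs_review"] else "allow"


decisions = [
    evaluate(AgentAction("update_locator", "tests/ui/checkout.py")),
    evaluate(AgentAction("generate_test", "tests/generated/coupon_flow.py")),
    evaluate(AgentAction("delete_test", "tests/ui/checkout.py")),
    evaluate(AgentAction("update_locator", "src/app.py")),
]
```

Denying by default keeps the agent's reach visible. Widening it means editing the policy, which is a change a person can review.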

Best Practices for Adopting Autonomous QA Safely

Here are a few best practices teams follow to adopt autonomous QA safely and effectively:

- Introduce autonomy in stages. Pilot it on regression or high-churn areas first.

- Keep humans in the loop. Let AI suggest and execute. Let people validate strategy.

- Set clear boundaries. Define what agents can change, generate, or prioritize.

- Measure outcomes, not hype. Track defect leakage, signal quality, and maintenance reduction.
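The outcome metrics in the last point can be tracked with very little machinery. The definitions below are common conventions rather than a standard, and every number in the example is made up.

```python
def ratio(part: float, whole: float) -> float:
    """Safe division: report 0.0 when there is nothing to measure yet."""
    return part / whole if whole else 0.0


def defect_leakage(found_in_production: int, found_before_release: int) -> float:
    """Share of all known defects that escaped to production."""
    return ratio(found_in_production, found_in_production + found_before_release)


def signal_quality(failed_runs: int, failures_with_real_defect: int) -> float:
    """Share of failing runs that pointed at a real defect rather than flakiness."""
    return ratio(failures_with_real_defect, failed_runs)


def maintenance_reduction(hours_before: float, hours_after: float) -> float:
    """Relative drop in time spent maintaining tests per sprint."""
    return ratio(hours_before - hours_after, hours_before)


# Made-up numbers for one quarter
leakage = defect_leakage(found_in_production=3, found_before_release=27)
signal = signal_quality(failed_runs=40, failures_with_real_defect=30)
saved = maintenance_reduction(hours_before=20, hours_after=12)
```

Tracking these numbers before and after a pilot gives a baseline to compare against.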

Where Autonomous QA Actually Lands

Autonomous QA isn’t some grand replacement story. No one’s taking testers or developers out of the loop.

What is changing is the amount of friction teams are willing to tolerate just to keep quality afloat. Endless script maintenance, noisy pipelines, and regressions that show up late in the cycle don’t scale anymore.

As systems get more distributed and releases get tighter, static automation starts to feel fragile. Agentic AI adds something automation never had – the ability to adjust when the ground shifts. Tests evolve with the product, instead of constantly playing catch-up.

This is where modern QA platforms are starting to matter. Tools like DevAssure are built around this shift, combining autonomous testing, intelligent prioritization, and self-healing workflows so teams can focus on shipping confidently instead of managing test debt.
