Flaky Tests from Race Conditions: Root Causes and Fixes

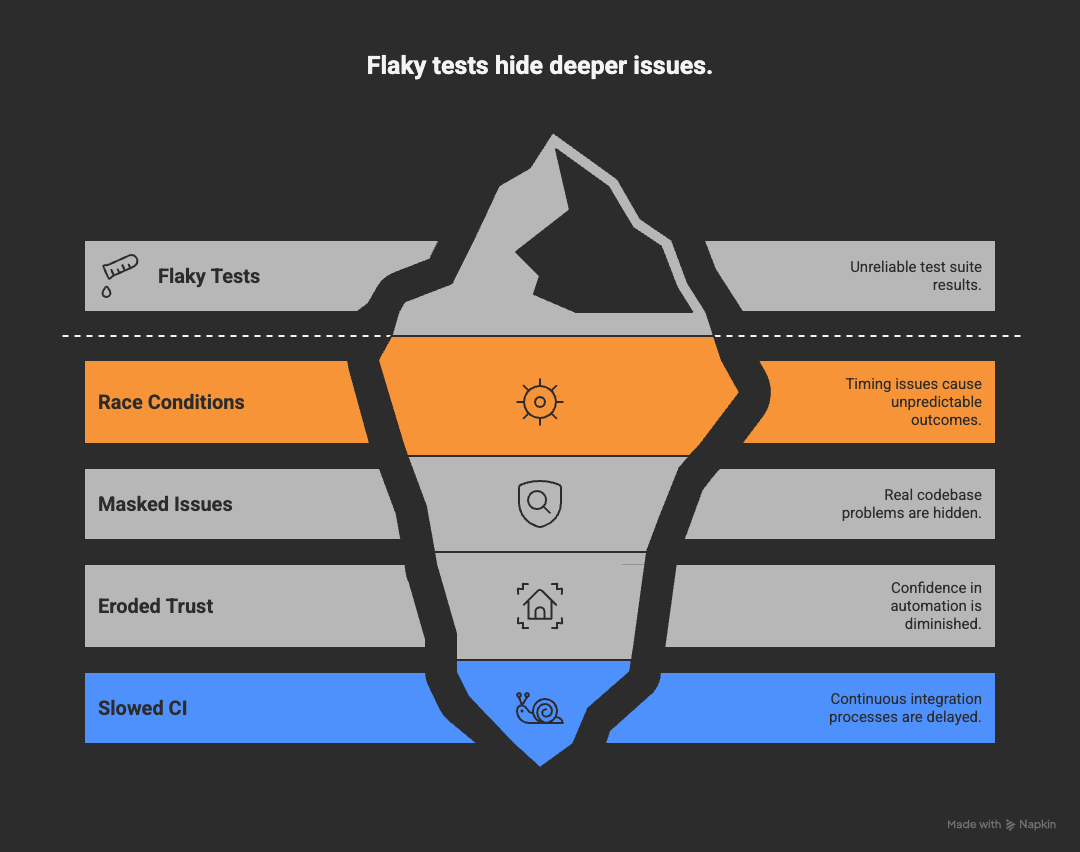

Flaky tests are a common challenge in software development. They make test suites unreliable and waste time on debugging. They are dangerous because they can mask real issues in your codebase and create a false sense of security, which erodes trust in test automation and slows down continuous integration. One of the primary causes of flaky tests is race conditions, where the timing of events leads to unpredictable outcomes.

A race condition occurs when two or more processes or threads access shared data at the same time and the result depends on the order in which they happen to run. This leads to unpredictable behavior and bugs that are difficult to reproduce. In testing, race conditions cause tests to pass or fail intermittently, depending on the timing of events.
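
The lost update below is a minimal sketch of that definition in plain JavaScript. The names `counter`, `incrementUnsafe`, and `runRace` are made up for illustration, and the interleaving is forced with a single `await` so the outcome is reproducible; in a real system the same gap comes from network calls, timers, or I/O and only shows up some of the time.

```javascript
// Shared state that two concurrent tasks both try to update.
let counter = 0;

// Unsafe increment: read, yield to the event loop, then write.
// The await stands in for any asynchronous gap between read and write.
async function incrementUnsafe() {
  const current = counter;   // read
  await Promise.resolve();   // the other task runs here
  counter = current + 1;     // write based on a possibly outdated read
}

// Runs two increments concurrently and returns the final counter value.
async function runRace() {
  counter = 0;
  await Promise.all([incrementUnsafe(), incrementUnsafe()]);
  return counter;
}

runRace().then((value) => console.log(`final counter: ${value}`));
// prints "final counter: 1", although two increments ran
```

Both tasks read `0` before either one writes, so one increment is lost. A test asserting that the counter equals `2` would fail whenever the tasks interleave this way.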

What is a Race Condition in Test Automation?

In test automation, race conditions can occur when tests are not properly synchronized with the application state. This is especially common in asynchronous web applications, where the user interface (UI) may be updated independently of the underlying network requests. For example, a test might attempt to click a button before it is fully rendered or enabled, leading to intermittent failures.

Deterministic vs Non-Deterministic Outcomes:

- Deterministic Outcomes: These occur when tests consistently produce the same results under the same conditions. For example, if a test always passes when a button is clicked after it is fully loaded, it is considered deterministic.

- Non-Deterministic Outcomes: These occur when tests produce different results under the same conditions. For example, if a test sometimes passes and sometimes fails when a button is clicked before it is fully loaded, it is considered non-deterministic.

To address race conditions in test automation, it is essential to implement strategies that ensure tests are executed in a controlled and predictable manner.

Symptoms You’ll See

- Intermittent Test Failures: Tests that pass and fail unpredictably.

- Timing Issues: Tests that fail due to timing issues, such as waiting for elements to load or become interactive.

- Inconsistent Test Results: Tests that produce different results when run multiple times.

- Resource Contention: Tests that fail due to contention for shared resources, such as databases or file systems.

- Order-Dependent Failures: Tests that fail when run in a specific order but pass when run independently.

- Environment-Specific Failures: Tests that pass in one environment (e.g., local) but fail in another (e.g., CI/CD pipeline).

Error Messages

- Timeout 30000ms exceeded while waiting for element to be clickable

- Element not visible / not attached to the DOM

- Stale Element Reference: The element you are trying to interact with is no longer attached to the DOM.

- Element is not interactable

- Network request failed or timed out

- Database deadlock or timeout errors

- File access errors (e.g., file is locked or in use by another process)

- Unexpected application state (e.g., modal not open, form not submitted)

Common Root Causes

AJAX/Fetch Calls Still Pending

When tests interact with web applications that use AJAX or Fetch API for asynchronous data loading, they may attempt to interact with elements before the data has fully loaded. This can lead to tests failing because the expected elements are not yet present in the DOM.

Animations/Transitions

Animations and transitions can cause elements to be in an intermediate state when tests attempt to interact with them. For example, a button may be in the process of fading in or sliding into view, making it unclickable at the moment the test tries to click it.
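
One way to make "the element has stopped moving" explicit is to sample its bounding box until two consecutive samples match. The helper below is a sketch under that assumption; `waitForStableBox` and the `getBox` callback are hypothetical names, not part of any library. Playwright's own actions such as `click` already perform a comparable stability check, so a helper like this is mainly useful for custom interactions or other tools.

```javascript
// Resolves after ms milliseconds.
function sleep(ms) {
  return new Promise((resolve) => setTimeout(resolve, ms));
}

// Polls getBox() until two consecutive samples are identical.
// getBox is a caller-supplied callback returning { x, y, width, height } or null.
async function waitForStableBox(getBox, { timeout = 5000, interval = 50 } = {}) {
  const deadline = Date.now() + timeout;
  let previous = await getBox();
  while (Date.now() < deadline) {
    await sleep(interval);
    const current = await getBox();
    const same = previous && current &&
      previous.x === current.x && previous.y === current.y &&
      previous.width === current.width && previous.height === current.height;
    if (same) return current; // the element did not move between samples
    previous = current;
  }
  throw new Error(`Element did not settle within ${timeout}ms`);
}
```

With Playwright, the callback could be `() => page.locator('#login-button').boundingBox()`.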

Stale Elements

Frameworks such as React, Vue, and Angular re-render components when the application state changes, which can detach DOM nodes the test has already located. A reference captured before the re-render then points at a node that is no longer in the document, and the test fails with a "stale element" error when it tries to interact with it.
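
A common remedy is to look the element up again right before each interaction instead of caching the reference. The sketch below assumes two caller-supplied callbacks, `findElement` and `action`, and guesses at what a stale error message looks like; all names here are hypothetical. Playwright locators already re-resolve the element on every action, so this pattern matters most when a test holds raw element handles.

```javascript
// Assumption: stale errors mention "stale", "not attached", or "detached".
function defaultIsStale(error) {
  return /stale|not attached|detached/i.test(String(error && error.message));
}

// Retries an action, performing a fresh lookup before every attempt so a
// re-render cannot leave the test holding a detached node.
async function withFreshElement(findElement, action, { attempts = 3, isStale = defaultIsStale } = {}) {
  let lastError;
  for (let attempt = 1; attempt <= attempts; attempt++) {
    const element = await findElement(); // never reuse an earlier reference
    try {
      return await action(element);
    } catch (error) {
      if (!isStale(error)) throw error;  // unrelated failures surface immediately
      lastError = error;
    }
  }
  throw lastError;
}
```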

Race in Assertions

Assertions that depend on the timing of events can lead to flaky tests. For example, asserting that an element is visible immediately after triggering an action may fail if the element takes time to appear.

Sample test failing because of a race condition

test('should display user profile after login', async () => {

await page.goto('https://example.com/login');

await page.fill('#username', 'testuser');

await page.fill('#password', 'password');

await page.fill('#otp', '123456');

// Fails intermittently: the button only becomes clickable after the OTP has been verified.

await page.click('#login-button');

});

Why does it fail? The test attempts to click the login button immediately after filling in the OTP. If the button is not yet clickable because verification is still in progress, the test fails intermittently.

How does this test become flaky? Sometimes it works because the button becomes clickable quickly enough, but other times it doesn't, leading to inconsistent results. This depends on factors like network latency, server response times, and client-side processing speed.

Best Practices to Fix the Flakiness

Use Explicit Waits

Explicit waits allow you to wait for specific conditions to be met before proceeding with the test. This can help ensure that elements are fully loaded and ready for interaction.

await page.waitForSelector('#login-button:enabled');

await page.click('#login-button');

Wait for Network Idle

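Waiting for the network request that a step depends on ensures the response has arrived before the test continues, which prevents failures caused by pending AJAX or Fetch calls. The example below starts listening for the OTP verification response before clicking, so the response cannot be missed.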

const [response] = await Promise.all([

page.waitForResponse(/api\/otp-verify/),

page.click('#login-button')

]);
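
The order matters in that snippet: the wait has to be registered before the action that triggers the request. The toy model below illustrates why. `createFakePage` is a hypothetical stand-in written for this example, not Playwright's implementation; it only delivers a response to listeners that exist at the moment of the click.

```javascript
// Tiny stand-in for a page that emits one response when clicked.
function createFakePage() {
  const listeners = [];
  return {
    // Resolves with the next response; only sees events emitted after this call.
    waitForResponse() {
      return new Promise((resolve) => listeners.push(resolve));
    },
    // Delivers the response to whoever is listening right now.
    async click() {
      const response = { status: 200 };
      listeners.splice(0).forEach((resolve) => resolve(response));
    },
  };
}

// Rejects if the promise does not settle within ms milliseconds.
function withTimeout(promise, ms) {
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(() => reject(new Error('timed out')), ms);
  });
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer));
}

// Racy order: the response is gone before anyone listens for it.
async function clickThenWait(page) {
  await page.click();
  return withTimeout(page.waitForResponse(), 50);
}

// Safe order: the listener is registered before the click fires.
async function waitAndClick(page) {
  const [response] = await Promise.all([page.waitForResponse(), page.click()]);
  return response;
}
```

In this model `waitAndClick` always receives the response, while `clickThenWait` times out because the response was delivered before the wait began. Against a real application the racy version fails only when the response is fast enough, which is exactly what makes it flaky.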

Assertions with Retries

Using assertions with retries can help ensure that the expected conditions are met before proceeding with the test. This can help prevent issues related to timing and state changes.

await expect(page.locator('#login-button')).toBeEnabled();
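
Playwright's `expect` on a locator retries on its own until the condition holds or the timeout expires. For checks that have no built-in retrying form, the same idea can be sketched by hand. `expectEventually` below is a hypothetical helper, not a library function: it reruns an assertion callback until it stops throwing.

```javascript
// Resolves after ms milliseconds.
function sleep(ms) {
  return new Promise((resolve) => setTimeout(resolve, ms));
}

// Reruns assertion() until it passes or the timeout expires.
// On timeout, the most recent assertion error is rethrown.
async function expectEventually(assertion, { timeout = 5000, interval = 100 } = {}) {
  const deadline = Date.now() + timeout;
  let lastError;
  do {
    try {
      return await assertion(); // passed: stop polling
    } catch (error) {
      lastError = error;        // remember why it failed and try again
    }
    await sleep(interval);
  } while (Date.now() < deadline);
  throw lastError;
}
```

The callback should only read state. If it also performs actions, each retry repeats them and can introduce a new race.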

Disable Animations in Test Env (Least recommended)

CSS overrides can be used to disable animations during testing. This can help ensure that tests run consistently without being affected by animations or transitions.

* { transition-duration: 0s !important; animation: none !important; }
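
One possible way to apply that rule from a test is to inject it with Playwright's `page.addStyleTag`. The helper name `disableAnimations` is made up for this sketch, and it assumes `page` is a Playwright `Page`.

```javascript
// The override from the rule above, applied to every element.
const DISABLE_ANIMATIONS_CSS =
  '* { transition-duration: 0s !important; animation: none !important; }';

// Injects the override into the document that is currently loaded.
// A navigation loads a new document, so call this again after page.goto().
async function disableAnimations(page) {
  await page.addStyleTag({ content: DISABLE_ANIMATIONS_CSS });
}
```

Because the tests then exercise a UI that differs from what users see, timing bugs that only appear with animations enabled can go unnoticed.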

Anti-Patterns to Avoid

Fixed Delays

await page.waitForTimeout(5000);

Fixed delays make tests slower than necessary and still do not reliably account for varying network conditions or application states. A delay that works in a local dev environment can still produce flaky tests in CI/CD pipelines, where network latency and server response times vary significantly. This is the most common anti-pattern I have seen in test suites, particularly those that run in parallel.

Chained locators without context

await page.click('div >> text=Submit');

Using chained locators without context can lead to brittle tests that are prone to breaking with minor UI changes.

Best Practices to Prevent Race Conditions

Using Stable Locators

Using stable locators such as data-testid attributes can help ensure that tests are resilient to UI changes. This can help prevent issues related to element identification and selection.
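
As a small illustration, a helper can build the attribute selector so that tests never spell it out by hand. `byTestId` is a hypothetical name used only in this sketch; Playwright also offers `page.getByTestId('login-button')` for the same purpose.

```javascript
// Builds a CSS attribute selector for a data-testid value.
// Quotes and backslashes are escaped so unusual ids cannot break the selector.
function byTestId(id) {
  const escaped = String(id).replace(/["\\]/g, '\\$&');
  return `[data-testid="${escaped}"]`;
}

console.log(byTestId('login-button'));
// prints [data-testid="login-button"]
```

A call such as `page.click(byTestId('login-button'))` keeps working when class names, element types, or button text change.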

Wrap waits with semantic meaning

Wrapping a wait in a helper named after the condition it represents (for example, "login form is ready") makes tests more readable and maintainable, and keeps the timing logic in one place.

await page.waitForSelector('#login-button:enabled', { state: 'visible' });

await page.click('#login-button');
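
Wrapped in named helpers, the same two calls might look like the sketch below. The function names `waitForLoginReady` and `submitLogin` and the 10-second default are assumptions made for this example, and `page` is assumed to be a Playwright `Page`.

```javascript
// Names the condition the test cares about instead of repeating a raw selector.
// Assumes the OTP check is what enables #login-button.
async function waitForLoginReady(page, { timeout = 10000 } = {}) {
  await page.waitForSelector('#login-button:enabled', { state: 'visible', timeout });
}

// Reads as the user's intent: submit once the form is ready.
async function submitLogin(page) {
  await waitForLoginReady(page);
  await page.click('#login-button');
}
```

If the readiness rule changes later, only `waitForLoginReady` needs to be updated.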

Monitor flaky test rate in CI

Tracking the flaky test rate in CI helps you spot unreliable tests early and confirm that fixes actually work, so that a failure signals a real defect rather than a timing accident.

Do not ignore flaky tests. Quarantine them and fix them as soon as possible.
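
How the rate is computed depends on what your CI exports. The sketch below assumes a list of run records with hypothetical `test`, `commit`, and `status` fields, and uses one possible definition: a test is flaky when the same commit produced both a pass and a fail for it.

```javascript
// Computes which tests are flaky and what share of all tests they represent.
function flakyTestRate(runs) {
  const outcomes = new Map(); // "test@commit" -> Set of statuses seen
  const tests = new Set();
  for (const { test, commit, status } of runs) {
    tests.add(test);
    const key = `${test}@${commit}`;
    if (!outcomes.has(key)) outcomes.set(key, new Set());
    outcomes.get(key).add(status);
  }
  const flaky = new Set();
  for (const [key, statuses] of outcomes) {
    // Same code, different results: the test itself is unreliable.
    if (statuses.has('passed') && statuses.has('failed')) {
      flaky.add(key.slice(0, key.lastIndexOf('@')));
    }
  }
  return { flaky: [...flaky].sort(), rate: tests.size ? flaky.size / tests.size : 0 };
}
```

The `flaky` list can feed a quarantine list, and the `rate` can be charted over time to see whether fixes are holding.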

Conclusion

Flaky tests imply broken trust in test automation. They waste debugging time and erode confidence in the test suite. By understanding their root causes, particularly those related to timing and synchronization, we can apply the practices above to reduce their impact and make our test automation more reliable.